In December 2013 the House of Commons Select Committee on Science and Technology announced an inquiry into the efficacy of screening for cancer. ‘The NHS spends a significant amount of money on health screening,’ Andrew Miller, the chair of the committee, said, ‘and it is important that this is underpinned by good scientific evidence.’ But the awkward truth is that much of the evidence is contested. The argument over breast cancer screening has been going on for decades, often bad-temperedly, and concerns not just the efficacy of the screening itself but its potential to do harm as well as good.

Cancer does not develop all at once: it emerges gradually from a sequence of changes, the earliest of which do not inevitably lead to malignant disease. Breast cancer begins in the cells lining the ducts that carry milk from the mammary gland to the nipple or, less commonly, in the lobular clusters of alveoli containing milk-secreting cells in the gland itself. It starts when the DNA that controls the behaviour of a cell is altered, or when the chemistry that regulates the expression of the DNA is changed. What happens next can be described as a process of evolution. When the DNA in a normal cell mutates, the cell acquires new capacities. A tumour is not just a mass of such cells: it isn’t unusual for less than 20 per cent of the cells in an aggressive breast tumour to be cancer cells, in the sense that they carry the altered DNA. Cancer cells acquire, through mutation, the power to recruit neighbouring normal cells to aid the growth of the tumour, building a network of blood vessels that supply it with oxygen and nutrients.

A cancer that will go on to kill its host must spread beyond the duct, first acquiring the power to disrupt the arrangement of the cells that form the duct wall. It can then invade the surrounding tissue and infiltrate the lymphatic system (the network of capillaries and ducts that recycles blood plasma to the heart), finally colonising vital organs. One of the difficulties with breast cancer screening is that about 20 per cent of the cancers detected are ‘ductal carcinoma in situ’ or DCIS, a precursor of cancer rather than the thing itself. In some cases – it is hard to say exactly how many – these cells will not acquire the capacity to spread and do harm. A woman might benefit from having her DCIS detected before it has acquired the capacity to hurt her, or she might end up being subjected to harmful treatment for a disease she doesn’t yet have, and may never have.

In the UK, screening for breast cancer is carried out by mammogram. The breast is compressed between plates and an X-ray image taken. Contrast in X-ray images varies according to the density of the materials photographed; the denser the material, the whiter it appears on the image. (Women under fifty are not screened as part of the national programme because cancer is less common in this age group and because younger women have denser breast tissue, which makes mammograms harder to read.) In a mammogram the contrast is between fat, which is more or less transparent to X-rays, and other tissues, which appear either as overlapping fibrous strands or as a dense cloud. These form a complex background against which the signs of cancer must be discerned. The signs are subtle and ambiguous. The most common are masses and microcalcifications. Masses appear either as round opaque shapes or as a set of radiating spikes which can easily be confused with normal tissues. Microcalcifications are small flecks of calcium salts, highly opaque to X-rays, which are deposited in the breast either as part of a normal process of change or as a consequence of cancer; sometimes it is possible to classify them as benign or malignant from their appearance on the mammogram, sometimes not.

All mammograms are read and reread in an effort to get accurate results, but mammography is not, as most radiologists would admit, a perfect test. Only around a third of the cancers detected in women over fifty are found at screening. Studies of cancers detected in women who had been given the all-clear at screening regularly find that 20 or 30 per cent of those cancers were missed on the screening mammogram. For now, however, we don’t have a better test. When mammography works, the cancer might be detected before it spreads, and that sometimes makes more effective treatment possible. But if you didn’t have cancer; if your cancer was missed on the mammogram; if your cancer had already spread before it showed up on the mammogram; if without screening you would have noticed a breast lump before your cancer had spread; if you would have survived the treatment for an advanced cancer; if you will not survive treatment for an early cancer; if you were always going to die of something else before the cancer got you: in all these cases, you will not have benefited from being screened. Although screening might reduce mortality, some argue that the gains are too slender to outweigh the costs.

Mammography has been used as a tool for screening healthy women since the 1960s, but its use was stepped up dramatically in the 1980s. In the US, Ronald Reagan’s announcement in 1987 that his wife, Nancy, had been diagnosed with cancer following a screening mammogram made a significant impact; by 1991, most states had passed legislation requiring insurance companies to cover the cost of mammograms. In the UK, a national screening programme was set up in 1988. Even in the early days there were worries. In 1985 a survey in the US found that 36 per cent of mammography facilities were producing images of unacceptable quality. Maureen Roberts, who led the Edinburgh Breast Cancer Screening Project until she herself died from the disease in 1989, wrote in an article published after her death that ‘we can no longer ignore the possibility that screening may not reduce mortality in women of any age, however disappointing that may be.’ Michael Baum, now emeritus professor of surgery at University College London, was instrumental in setting up the UK programme, but resigned from the governing committee of the NHS Breast Screening Programme in 1997, arguing that the information disseminated by the programme about the risks and benefits of screening was misleading. In February this year, the Swiss Medical Board published a report recommending that no new mammography screening programmes be introduced and that the existing ones be wound up.

Screening has been seen by some as an instance of the increasing intrusion of medicine into the lives of healthy people; one early critic, Petr Skrabanek, described it as a technique of ‘coercive healthism’, a demonstration of the power of the state over individuals. Others are suspicious of profit-hungry corporations and the vested interests of doctors and breast cancer charities. But the principal debate turns on two questions. First, is screening effective in saving lives? Since screening programmes were introduced, deaths from breast cancer have fallen. However, since new treatments such as tamoxifen became available at around the same time as screening took off, it isn’t easy to tell what the effect of screening has been.

The most reliable form of clinical evidence is the randomised controlled trial. The most authoritative basis for judging the efficacy of a medical intervention such as screening is a ‘meta-analysis’ of all sufficiently rigorous relevant trials. In 1993 a global collaboration of researchers, named after the Scottish epidemiologist Archie Cochrane, was set up to put together a library of systematic reviews and meta-analyses, so that clinical interventions and policy decisions could be based on the evidence. Peter Gøtzsche, a Danish endocrinologist who had studied bias in trials of anti-arthritic drugs, was an early recruit, and left clinical practice to set up the Nordic Cochrane Centre in Copenhagen. In 1999, five weeks before the Danish government was expected to vote in favour of establishing a national breast cancer screening programme, the Danish Board of Health received a letter from senior clinicians questioning the move. The board contacted Gøtzsche, who, despite having no prior expertise in the field, took just four weeks to produce an 11-page report highly critical of the existing evidence on screening. Shortly afterwards, Gøtzsche and Ole Olsen submitted a version to the Lancet, which published it the following year.

Trials of mammographic screening are expensive: tens of thousands of participants are required (since only 7 per cent of them will develop cancer), and all of them must be monitored for ten or even twenty years. So far, there have been only eight trials. The first was in New York in 1963. There followed trials in Malmö, starting in 1976; the Two Counties trial, also in Sweden, in 1977; Edinburgh in 1978; Canada in 1980; Stockholm in 1981 and Göteborg in 1982; and the UK Age trial, which looked at younger women, in 1991. The Swedish trials provided what seemed to be clear evidence in favour of screening, prompting countries across the developed world to introduce national programmes. The Two Counties trial was the largest, with two Swedish counties divided into 45 municipalities, 26 of which were randomly selected to be allocated a screening programme. Women between 50 and 74 were invited for screening every three years, younger women every two years. All those diagnosed with breast cancer were followed up and any deaths recorded. By 1984, a 31 per cent reduction in deaths from breast cancer was observed in the screened municipalities; at that point it was decided to end the trial and to invite all women in the two counties to be screened.

Gøtzsche and Olsen were unhappy with the trial. One of their complaints was about the way the municipalities had been randomised. There were some unexpected variations between the two samples: for example, the average age of the women selected for screening and the ones who weren’t differed by five months. Olsen and Gøtzsche didn’t argue that this difference affected the results; their point was that since there shouldn’t be any detectable differences between two randomly allocated samples of this size (133,000 women), the fact that there was a difference cast suspicion on the randomness of the allocation. But there was an even greater worry. Mortality data is very reliable, because deaths are always documented. Data concerning what people die of is less reliable, because assigning cause of death is to some degree subjective: what, for example, should be given as the cause of death in the case of an elderly patient who dies of an infection shortly after being treated for breast cancer? In the Two Counties trial there was no difference in the number of breast cancer patients who died between the control group and the screened group, only a difference in what they were recorded as dying of. The people running the trial had to review the notes for every breast cancer patient who died and decide whether or not she should be classified as having died of breast cancer. In a blind trial, when they made that judgment they wouldn’t have been in a position to know whether the patient was in the control group or the screened group, but in the Two Counties trial, they did know.

Such shortcomings led Gøtzsche and Olsen to argue that none of the trials that had produced results favourable to screening (New York, the Two Counties trial, Edinburgh, Stockholm, Göteborg) could be trusted; only the two trials (in Malmö and Canada) that failed to find any benefit from screening were sound. The UK Age trial results weren’t published until later, in 2006. Gøtzsche’s conclusions weren’t popular; he responded by dismissing the consensus in favour of screening as a conspiracy of vested interests. In 2011, Mike Richards, the national cancer director at the Department of Health, commissioned a review of the evidence on breast cancer screening, to be conducted by experts with no prior involvement in the field. The panel, whose report was published in 2012, found that while there were problems with many of the trials, the resulting biases would be expected to lead to different errors: the best strategy, it decided, was to include all but the most compromised trials in the meta-analysis. Its best estimate of the benefit of screening was that in the over-fifties it reduced deaths from breast cancer by 20 per cent.

The second vital question about breast cancer screening is: how much harm does it do to healthy women? Screening involves giving a dose of radiation to generally healthy women. The compression of the breast during the test is painful and false positive results can provoke anxiety. But the more serious problem is ‘over-diagnosis’. This is not the same as misdiagnosis. If a healthy woman is mistakenly identified as having breast cancer, that is a misdiagnosis. When a woman has breast cancer but doesn’t need to know that she has it, that is over-diagnosis. Oncologists talk about a tumour’s ‘sojourn time’, the period of time between the point at which it becomes detectable at screening and the point at which it would be detected anyway. In some cases of breast cancer the sojourn time can be very long, so long that the cancer will never be detected. Any woman who dies of another cause during the sojourn time would have been better off not being screened. For such women, a positive mammogram means they are likely to receive harmful and mutilating treatment that can only reduce their life expectancy.

Over-diagnosis is an inevitable consequence of screening. But if screening is catching many cancers that would grow slowly, or not at all, in an age group that is at significant risk of death from other causes, the over-diagnosis rate may be so high that screening is doing more harm than good. Given that a large proportion of screen-detected cancers are DCIS, there is clearly potential for substantial over-diagnosis. We can estimate the rate of over-diagnosis by comparing the incidence of cancer in unscreened and screened populations: the rates before and after the introduction of a screening programme, for example. Gøtzsche analysed the change in incidence after the UK screening programme began and estimated over-diagnosis at 57 per cent, measured as excess cases relative to the number expected without screening. Put as a share of all the cancers diagnosed, that is 57 in every 157: in other words, he found that approximately one cancer in three is an artefact of screening rather than a potential life saved.

The UK panel held that the best way to estimate over-diagnosis was to compare detection rates in the screened and unscreened groups of women who had taken part in the randomised controlled trials. Some of the difference would be the result of over-diagnosis, but part would be due to early detection. In order to distinguish between the two, you need to continue to compare the groups after the end of the trials, because more cancers in the unscreened group will be revealed. The problem is that when most of the trials ended, screening was offered to the women in the control groups, skewing the figures. The panel therefore looked only at the Canadian trial and one of the Swedish trials, in which no screening was offered. The data from these trials suggested that the excess incidence in the screened population was only 11 per cent of the long-term expected incidence, much lower than Gøtzsche’s result.

The findings of the UK panel were widely seen as an endorsement of screening. After all, it found that a substantial number of lives were saved, and the rate of over-diagnosis was comparatively low. The panel’s focus, understandably, was on the most authoritative evidence: the data from the randomised controlled trials. But these trials were, for the most part, conducted in the 1980s or even earlier. Any progress since then in treating advanced cases – and there has been progress – reduces the benefits of earlier detection. Also, any improvements in the sensitivity of mammography increase the risk of over-diagnosis. It’s possible that such changes render findings derived from old trial data irrelevant. When more recent data are used, adjustments must be made to take account of the fact that the data weren’t acquired in the controlled setting of a clinical trial. But what one person sees as a necessary adjustment, another thinks of as fiddling the figures. As an illustration, compare Gøtzsche’s estimate of over-diagnosis in the UK screening programme with that of another statistician, Stephen Duffy.

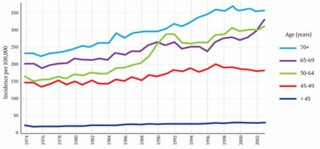

This graph shows the incidence of breast cancer in women of different ages in the UK from 1974 to 2003. The key feature is the unmistakeable spike in cases in the 50-64 age-group, which followed the introduction of the screening programme between 1988 and 1990. To calculate how much of that increase is due to over-diagnosis we must take account of at least two other factors. One is the gradual overall increase in cases of breast cancer, which can be seen on the graph in the years leading up to 1988 and, it would be reasonable to assume, would have continued along the same trajectory had screening not been introduced. Gøtzsche calculates the expected number of cases using data from the years 1971 to 1984; he ignores the years from 1985 to 1988 on the basis that some screening was available by 1985 and there was already evidence by then of an upturn in diagnosis. Duffy argues that this exclusion is unwarranted and that its effect is to exaggerate the amount of over-diagnosis.

The second factor is the impact of early diagnosis. There is a spike partly because, in the years after screening was introduced, cancers were being found that would otherwise have been detected a year or two later. The number of cancers that fall into this category can be estimated from the later ‘compensatory drop’ in detection rates that should be observed once women leave the screening age group. Duffy sees clear evidence of this both in the 65-69 age group, where incidence fell after 1988 until 1996, when it began to rise, increasingly rapidly after 2002, when the upper age-limit for screening was raised to 70; and in the over-70s, where from 1996 on, although there is no downturn, incidence is lower than would be expected from the pre-1988 trend. He estimated that 25,042 extra tumours had been found as a result of screening in the age range 45-64, and 18,981 fewer when those same women were aged 65 years and over. Gøtzsche, conversely, claims emphatically that there is no compensatory drop and that Duffy’s adjustment is ‘funny numbers’. The contrast is stark. Whereas Gøtzsche estimates that one cancer in three is over-diagnosed, Duffy suggests the figure is only one in 28. It is easy to see why the UK panel felt obliged to fall back on thirty-year-old clinical trials.
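Duffy's adjustment is, at bottom, a subtraction: the extra tumours found in the screened age range, less the shortfall seen later in the same women. The sketch below uses only the two counts quoted above. The variable names are illustrative, and the further steps by which Duffy arrives at 'one in 28' are not given here and are not reproduced.

```python
# Counts quoted in the text from Duffy's estimate.
extra_found_45_64 = 25_042    # extra tumours found at ages 45-64 as a result of screening
fewer_found_65_plus = 18_981  # fewer tumours found in the same women at 65 and over

# Excess that remains once the compensatory drop is taken into account.
net_excess = extra_found_45_64 - fewer_found_65_plus

# Share of the initial spike that the later drop cancels out.
share_offset = fewer_found_65_plus / extra_found_45_64

print(net_excess)             # 6061
print(f"{share_offset:.0%}")  # 76%
```

On these figures, about three-quarters of the spike is accounted for by earlier diagnosis. If there is no compensatory drop, as Gøtzsche maintains, the second count is zero and the whole spike counts as excess.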

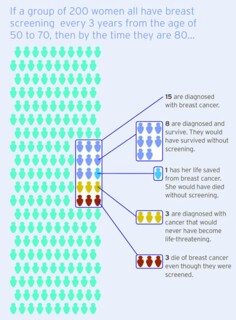

Gøtzsche has also criticised screening programmes for using misleading statistics. If you are told that screening reduces breast cancer deaths by 20 per cent you will probably feel that is a substantial reduction. But the same information, put differently, seems less impressive: the 20 per cent reduction means that the likelihood that you will die of breast cancer while in the screened age-group drops from 2.1 per cent to 1.7 per cent. A further example: the UK panel’s headline figure for over-diagnosis was obtained by calculating excess incidence as a proportion of the long-term expected incidence. It isn’t surprising that the panel would think this way: their perspective is that of researchers studying populations. But a woman deciding whether or not to be screened is more likely to want to know what proportion of cancers detected at screening are over-diagnosed. To get that figure, we exclude cancers found after the woman has left the screening age-group, in which case the excess incidence is higher: 20 per cent rather than 11 per cent.
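The first of these reframings is simple arithmetic, and can be checked in a few lines of Python. The 2.1 per cent baseline and the 20 per cent relative reduction are the figures quoted above. The function name and the 'per 1000 women' presentation are illustrative additions, not taken from the panel's report.

```python
def risk_after_reduction(baseline_risk: float, relative_reduction: float) -> float:
    """Apply a relative risk reduction to a baseline risk (both given as fractions)."""
    return baseline_risk * (1.0 - relative_reduction)

baseline = 0.021           # risk of dying of breast cancer without screening
relative_reduction = 0.20  # headline reduction attributed to screening

screened = risk_after_reduction(baseline, relative_reduction)
absolute_reduction = baseline - screened

print(f"risk with screening: {screened:.1%}")                             # 1.7%
print(f"deaths averted per 1000 women: {absolute_reduction * 1000:.1f}")  # 4.2
```

The same 20 per cent reduction therefore amounts to roughly four fewer deaths for every thousand women, which is why the choice of framing matters so much to how the benefit is perceived.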

As part of a drive to improve the public understanding of screening, the NHS commissioned independent researchers to build a website – Informed Choice about Cancer Screening – which presents these risks in a simple graphic (above). The figures used are the estimates of the UK panel. But the clarity of this image risks concealing the uncertainties about screening. New randomised trials might help. It is hard, however, to see how a trial could be conducted in a country that already has a screening programme, and funding bodies might also be reluctant to back it, in the belief that breast cancer research could easily lead to a breakthrough that would invalidate any trial’s conclusions. Good progress is being made in understanding the mechanisms by which cells mutate to become, first, DCIS and then invasive cancer, and there is hope of a test that would predict the invasive potential of a cluster of DCIS cells. An obvious target for research is to identify a molecular signature that differentiates DCIS that requires treatment from DCIS that doesn’t yet. That signature hasn’t yet been discovered; there is, it seems, no single change in the DNA of DCIS cells that predicts progression. However, recent research suggests that there may be a distinctive profile in aberrant cells that are not part of the tumour but are under its control, and that this may be a marker for invasiveness. If treatment decisions could be based on a more accurate estimate of the likelihood that a cancer will spread, the risk of over-diagnosis would be dramatically reduced.

For the moment, though, we must make the best of the evidence we have. Ideally, we would make impartial assessments based on the facts presented by dispassionate experts. In practice, it isn’t possible, at least from their published articles, to check all the details of the calculations carried out by Gøtzsche and Duffy. Some of Duffy’s criticisms are contradicted in Gøtzsche’s subsequent publications. If Duffy says Gøtzsche cheated by using a single year to measure the post-screening incidence when he should have averaged over the period, and Gøtzsche then says that isn’t true, that he does average over the period, how do you decide who is right? Gøtzsche devotes a chapter of his book to his opponents’ ad hominem attacks, yet his own style is aggressive and even nasty: he mentions, for example, how odd it was that the Swedish Two Counties trial, at the time the largest piece of public health research carried out in the country, should have been entrusted to a Hungarian. That sort of thing leaves me wanting to side with Duffy, but that isn’t the way science is supposed to work. In the end the question of whether or not breast screening should continue will be determined by politicians’ assessments of what the public wants. And the public seems to want screening. When asked about over-diagnosis, most women say that rates even as high as 30 per cent wouldn’t stop them having a mammogram.
